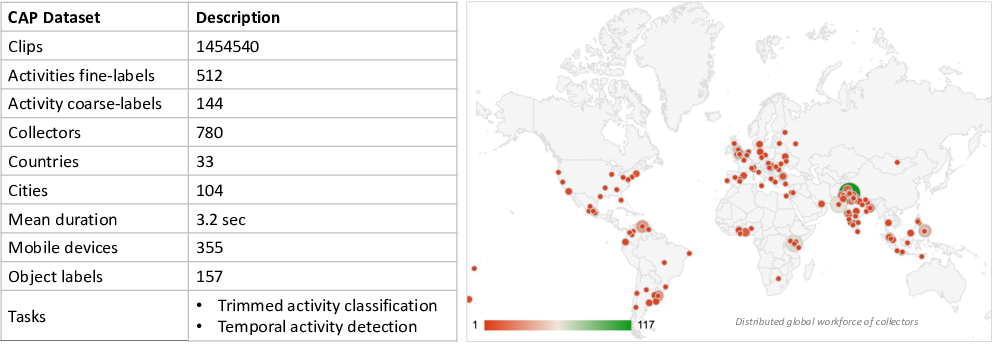

The Consented Activities of People (CAP) dataset is a fine-grained activity dataset for visual AI research, curated using the Visym Collector platform. The CAP dataset contains annotated videos of fine-grained activity classes performed by consented people. Videos are recorded on mobile devices around the world from a third-person viewpoint looking down on the scene from above, and show subjects performing everyday activities. Videos are annotated with bounding box tracks around the primary actor, along with temporal start/end frames for each activity instance, and are distributed in vipy json format. An interactive visualization and a video summary are available for review below.

The CAP dataset was collected with the following goals:

This dataset is associated with the:

The dataset explorer shows a 4% sample of the CAP dataset, tightly cropped spatially around the actor and cropped temporally around the fine-grained activity being performed. The full dataset includes the larger spatiotemporal context in each video around the activity, and the complete set of activity labels. This open source visualization tool can be sorted by category or color, and shown in full screen.
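The relationship between the full annotations and the tight crop shown in the explorer can be illustrated with a minimal sketch. It assumes a hypothetical in-memory layout (a track as a mapping from frame index to an `(xmin, ymin, xmax, ymax)` box, and an activity as a label with inclusive start/end frames); these names and the example label are illustrative only and are not the vipy API or the actual CAP json schema. The sketch clips the track to the activity interval and takes the union of the actor boxes over that interval.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

# Hypothetical box layout: (xmin, ymin, xmax, ymax) in pixels.
Box = Tuple[float, float, float, float]


@dataclass
class Activity:
    """Hypothetical activity annotation with inclusive frame bounds."""
    label: str
    start_frame: int
    end_frame: int


def tight_crop(track: Dict[int, Box], activity: Activity) -> Tuple[Tuple[int, int], Box]:
    """Return the (first_frame, last_frame) span and the union box of the
    actor track restricted to the activity's temporal interval."""
    frames = [f for f in sorted(track)
              if activity.start_frame <= f <= activity.end_frame]
    if not frames:
        raise ValueError("track has no boxes inside the activity interval")
    boxes = [track[f] for f in frames]
    union = (
        min(b[0] for b in boxes),
        min(b[1] for b in boxes),
        max(b[2] for b in boxes),
        max(b[3] for b in boxes),
    )
    return (frames[0], frames[-1]), union


# Illustrative data only: a four-frame track and a two-frame activity.
track = {
    0: (10, 20, 50, 120),
    1: (12, 22, 52, 122),
    2: (30, 18, 70, 118),
    3: (200, 200, 240, 300),
}
activity = Activity("person_opens_door", start_frame=1, end_frame=2)
span, box = tight_crop(track, activity)
print(span, box)
```

Frames outside the activity interval (here frames 0 and 3) do not affect the crop, which is why the full dataset retains more spatiotemporal context than the cropped sample.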

This video visualization shows a sample of 40 activities from each of 28 collectors, tightly cropped around the actor. We also provide visualizations of a random sample of the full-context videos available in the training/validation set and of 5 Hz background-stabilized videos.

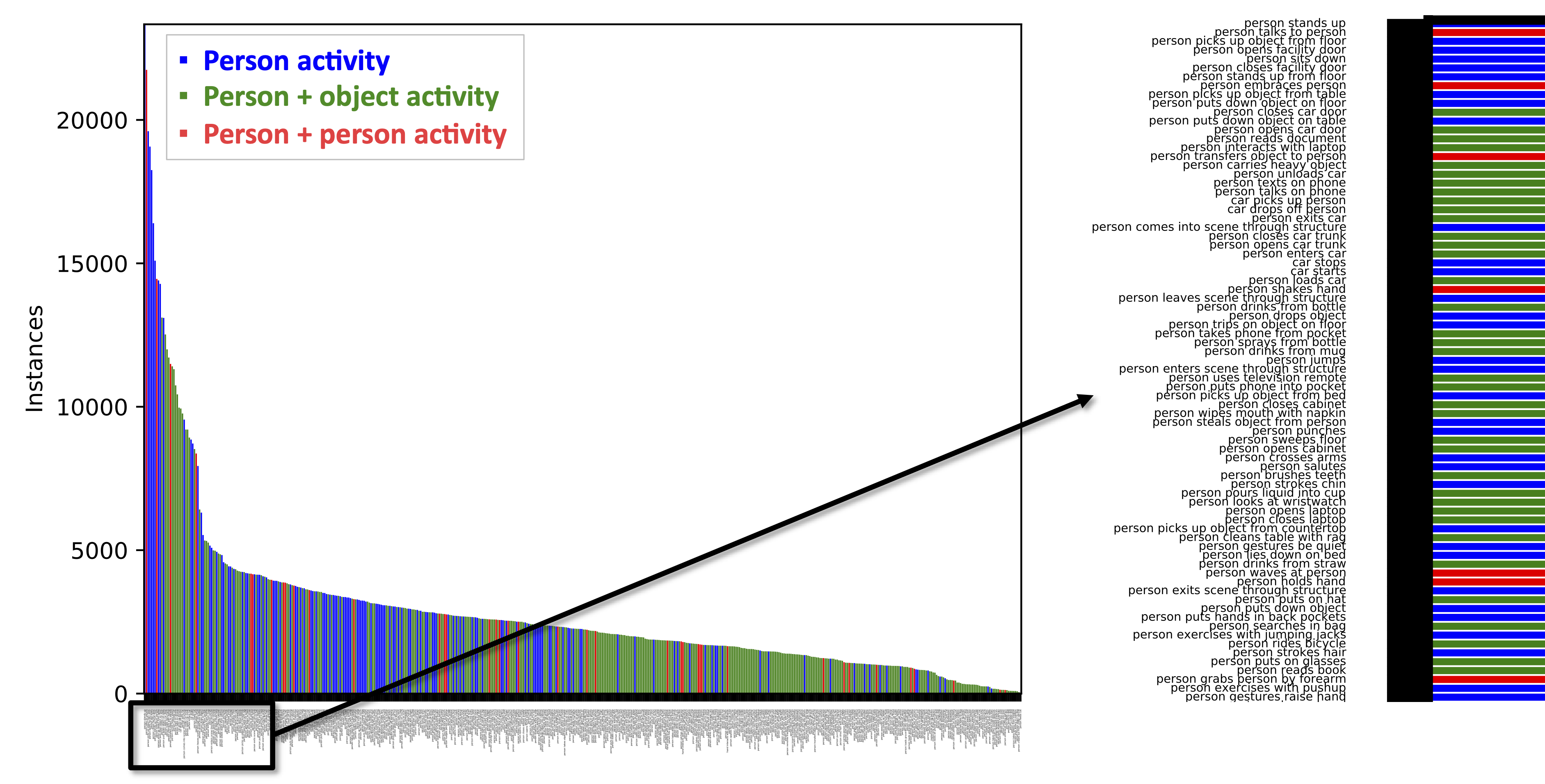

This summary shows the statistics of the entire CAP dataset, which includes the activity classification and activity detection subsets as well as the sequestered test sets. The public training/validation sets for specific tasks will be smaller than these totals.

Creative Commons Attribution 4.0 International (CC BY 4.0). Every subject in this dataset has consented to their personally identifiable information being shared publicly for the purpose of advancing computer vision research. Non-consented subjects have their faces blurred out.

Jeffrey Byrne (Visym Labs), Greg Castanon (STR), Zhongheng Li (STR) and Gil Ettinger (STR)

“Fine-grained Activities of People Worldwide”, arXiv:2207.05182, 2022

@article{Byrne2023Fine,
  title = "Fine-grained Activities of People Worldwide",
  author = "J. Byrne and G. Castanon and Z. Li and G. Ettinger",
  journal = "Winter Applications of Computer Vision (WACV'23)",
  year = 2023
}

Supported by the Intelligence Advanced Research Projects Activity (IARPA) via Department of Interior/Interior Business Center (DOI/IBC) contract number D17PC00344. The U.S. Government is authorized to reproduce and distribute reprints for Governmental purposes notwithstanding any copyright annotation thereon. Disclaimer: The views and conclusions contained herein are those of the authors and should not be interpreted as necessarily representing the official policies or endorsements, either expressed or implied, of IARPA, DOI/IBC, or the U.S. Government.

We thank the AWS Open Data Sponsorship Program for supporting the storage and distribution of this dataset.

Visym Labs <info@visym.com>