People in Public - 370k - Stabilized

Overview

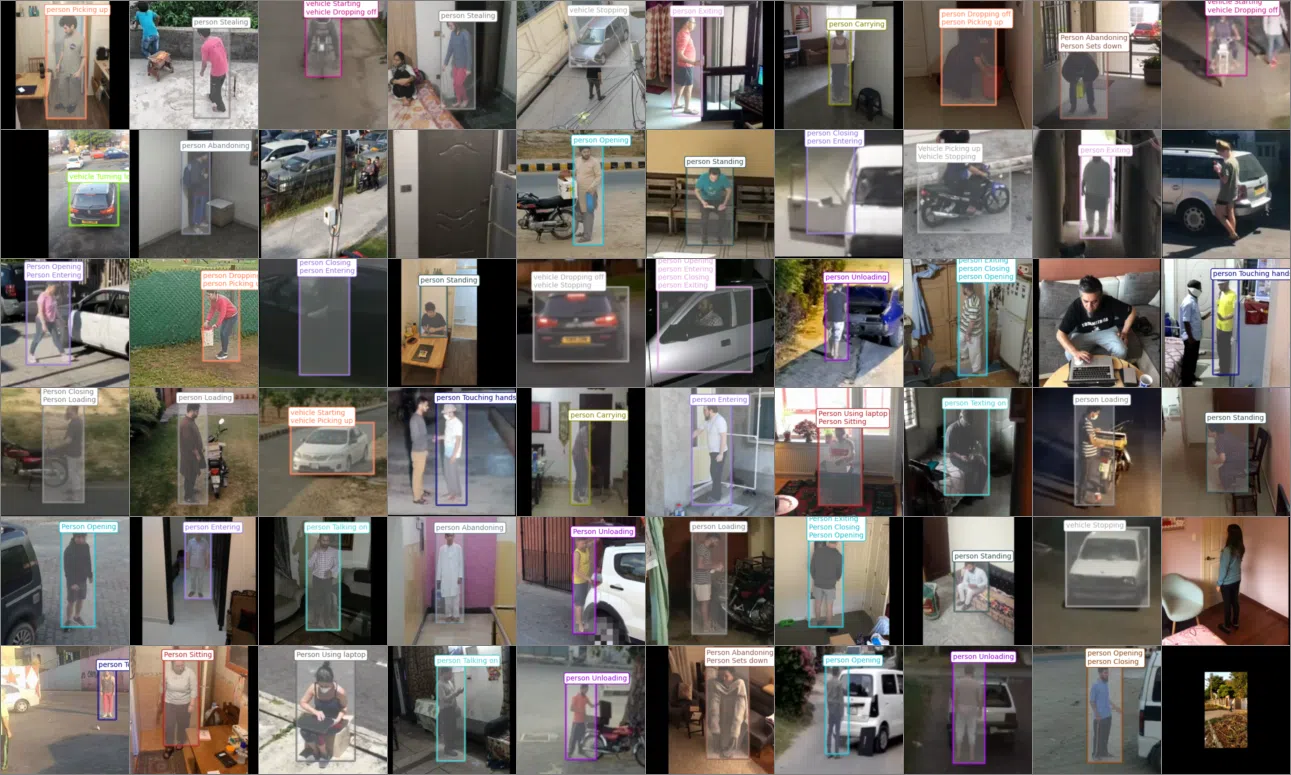

The People in Public dataset is a consented large scale video dataset of people doing things in public places. Our team has pioneered the use of a custom designed mobile app that combines video collection, activity labeling and bounding box annotation into a single step. Our goal is to make collecting annotated video datasets as easily and cheaply as recording a video. Currently, we are collecting a dataset of the MEVA classes (http://mevadata.org). This dataset contains 405,781 background stabilized video clips of 63 classes of activities collected by over 150 subjects in 44 countries around the world.

Download

- pip_370k.tar.gz (226 GB) MD5:2cf844fbc78fde1c125aa250e99db19f Last Updated 16Sep21

- This dataset contains 405,781 background stabilized video clips of 63 classes of activities.

- This release uses the no-meva-pad annotation style.

- This is the recommended dataset for download, as it is the union of pip-170k-stabilized, pip-250k-stabilized and pip-d370k-stabilized, which fixes known errata.

- The annotations of the pip-175k subset can be downloaded separately. Last Updated 16Apr23

Quickstart

See pip-175k.

To extract the smallest square video crop containing the stabilized track for a vipy.video.Scene() object v:

import vipy

v = vipy.util.load('/path/to/stabilized.json')[0] # load videos and take one

vs = v.crop(v.trackbox(dilate=1.0).maxsquare()).resize(224,224).saveas('/path/to/out.mp4')

vs.getattribute('stabilize') # returns a stabilization residual (bigger is worse)

Best Practices

Notebook demo [html][ipynb] showing best practices for using the PIP-175k dataset for training.

Errata

- A small number of videos exhibit a face detector false alarm which looks like a large pixelated circle which lasts a single frame. This is the in-app face blurring incorrectly redacting the background. You can filter these videos by removing videos v with

videolist = [v for v in videolist if not v.getattribute('blurred faces') > 0]

- The metadata for each video in PIP-370k contains unique IDs that identify the collector who recorded the video and the subject in the video. A portion of the PIP-370k collection included a bug that caused subject IDs to be randomly generated. We recommend using the collector ID as the identifier of the subject. Collectors and subjects were required to work in pairs for this collection, so the collector ID uniquely identifies when the collector is behind the camera and their subject is in front of the camera. This means that collector_id for this collection will uniquely identify the subject in the pixels. This can be accessed using the following for a video object v:

v.metadata()['collector_id']

Frequently Asked Questions

- Are there repeated instances in this release? For this release, we asked collectors to perform the same activity multiple times in a row per collection, but to perform the activity slightly differently each time. This introduces a form of on-demand dataset augmentation performed by the collector. You may identify these collections with a filename structure “VIDEOID_INSTANCEID.mp4” where the video ID identifies the collected video, and each instance ID is an integer that identifies the order of the collected activity in the collected video.

- Are there missing activities? This is an incremental release, and should be combined with pip-250k for a complete release.

- What is “person_walks”? This is a background activity class. We asked collectors to walk around and act like they were waiting for a bus or the subway, to provide a background class. The collection names in the video metadata for these activities are “Slowly walk around in an area like you are waiting for a bus” and “Walk back and forth”.

- Do these videos include the MEVA padding? No, these videos are collected using the temporal annotations from the collectors directly. This is due to the fact that many of the activities are collected back to back in a single collection, which may violate the two second padding requirement for MEVA annotations. If the MEVA annotations are needed, they can be applied as follows to a list of video objects (videolist):

padded_videolist = pycollector.dataset.asmeva(videolist)

- How is this related to pip-175k and pip-250k? This dataset is a superset of pip-175k and pip-250k.

- How is background stabilization performed? Background stabilization was performed using an affine coarse to fine optical-flow method, followed by actor bounding box stabilization. Stabilization is designed to minimize distortion for small motions in the region near the center of the actor box. Remaining stabilization artifacts are due to non-planar scene structure, rolling shutter distortion, and sub-pixel optical flow correspondence errors. Stabilization artifacts manifest as a subtly shifting background relative to the actor which may affect optical flow based methods. All stabilizations can be filtered using the provided stabilization residual which measures the quality of the stabilization.

License

Creative Commons Attribution 4.0 International (CC BY 4.0)

Every subject in this dataset has consented to their personally identifable information to be shared publicly for the purpose of advancing computer vision research. Non-consented subjects have their faces blurred out.

Acknowledgement

Supported by the Intelligence Advanced Research Projects Activity (IARPA) via Department of Interior/ Interior Business Center (DOI/IBC) contract number D17PC00344. The U.S. Government is authorized to reproduce and distribute reprints for Governmental purposes notwithstanding any copyright annotation thereon. Disclaimer: The views and conclusions contained herein are those of the authors and should not be interpreted as necessarily representing the official policies or endorsements, either expressed or implied, of IARPA, DOI/IBC, or the U.S. Government.

Contact

Visym Labs <info@visym.com>